| S | |
|---|---|
| O | |
| L | |
| I | |
| D | |

DevelopTime

Saturday, September 16, 2017

SOLID

Thursday, October 15, 2015

MvcScaffolding: Standard Usage

http://blog.stevensanderson.com/2011/01/13/mvcscaffolding-standard-usage/

What is a scaffolder?

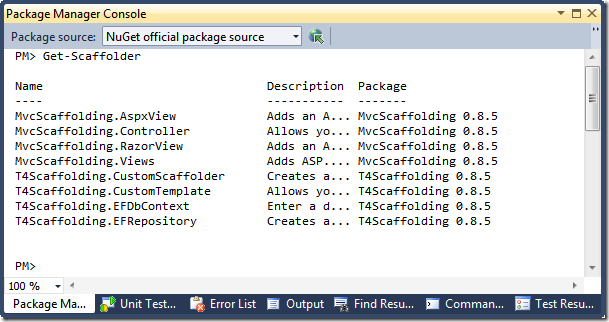

A scaffolder is something that does scaffolding. To see a list of available scaffolders, issue this command in the Package Manager Console:

    Get-Scaffolder

Hopefully, your screen will be a little wider and you’ll actually be able to read the descriptions! Anyway, as you can see, scaffolders can live in NuGet packages and they have unique names like MvcScaffolding.RazorView. You could have lots of different scaffolders that produce views (e.g., you already have MvcScaffolding.AspxView too), and identify them by their unique name. For example, you could choose to scaffold an ASPX view as follows:

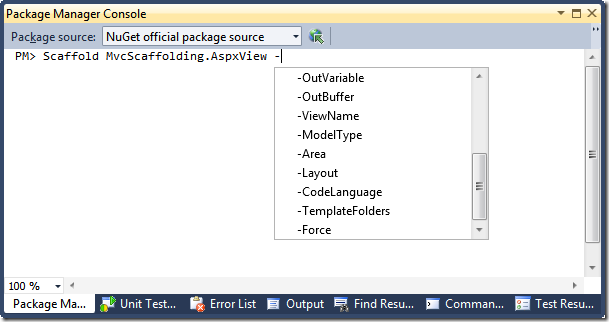

    Scaffold MvcScaffolding.AspxView Index Team

Scaffolders can accept their own set of parameters. In PowerShell, named parameters are introduced with a dash (“-”) character, so to see a list of available parameters, enter text as far as the dash and then press TAB:

Some of those are specific to the scaffolder; others are common PowerShell parameters. I’ll explain what the scaffolder-specific ones do later.

Sunday, February 23, 2014

Behavior-Driven Development with SpecFlow and WatiN

As automated unit testing becomes more ubiquitous in software development, so does the adoption of various test-first methods. These practices each present a unique set of opportunities and challenges to development teams, but all strive to establish a “testing as design” mindset with practitioners.

For much of the test-first era, however, the method for expressing the behavior of a user has been through unit tests written in the language of the system—a language disconnected from that of the user. With the advent of Behavior-Driven Development (BDD) techniques, this dynamic is changing. Using BDD techniques you can author automated tests in the language of the business, all while maintaining a connection to your implemented system.

Of course, a number of tools have been created to help you implement BDD in your development process. These include Cucumber in Ruby and SpecFlow and WatiN for the Microsoft .NET Framework. SpecFlow helps you write and execute specifications within Visual Studio, while WatiN enables you to drive the browser for automated end-to-end system testing.

In this article, I’ll provide a brief overview of BDD and then explain how the BDD cycle wraps the traditional Test-Driven Development (TDD) cycle with feature-level tests that drive unit-level implementation. Once I’ve laid the groundwork for test-first methods, I’ll introduce SpecFlow and WatiN and show you examples of how these tools can be used with MSTest to implement BDD for your projects.

Figure 1 The Test-Driven Development Cycle

Many developers and teams have had great success with TDD. Others have not, and find that they struggle with managing the process over time, especially as the volume of tests begins to grow and the flexibility of those tests begins to degrade. Some aren’t sure how to start with TDD, while others find TDD easy to initiate, only to watch it abandoned as deadlines near and large backlogs loom. Finally, many interested developers meet resistance to the practice within their organizations, either because the word “test” implies a function that belongs on another team or because of the false perception that TDD results in too much extra code and slows down projects.

Steve Freeman and Nat Pryce, in their book, “Growing Object-Oriented Software, Guided by Tests” (Addison-Wesley Professional, 2009), note that “traditional” TDD misses some of the benefits of true test-first development:

“It is tempting to start the TDD process by writing unit tests for classes in the application. This is better than having no tests at all and can catch those basic programming errors that we all know but find so hard to avoid … But a project with only unit tests is missing out on critical benefits of the TDD process. We’ve seen projects with high-quality, well unit-tested code that turned out not to be called from anywhere, or that could not be integrated with the rest of the system and had to be rewritten.”

In 2006, Dan North documented many of these challenges in an article in Better Software magazine (dannorth.net/introducing-bdd). In his article, North described a series of practices that he had adopted over the prior three years while in the trenches with testing. While still TDD by definition, these practices led North to adopt a more analysis-centric view of testing and to coin the term Behavior-Driven Development to encapsulate this shift.

One popular application of BDD attempts to extend TDD by tightening the focus and process of creating tests through Acceptance Tests, or executable specifications. Each specification serves as an entry point into the development cycle and describes, from the user’s point of view and in a step-by-step form, how the system should behave. Once written, the developer uses the specification and their existing TDD process to implement just enough production code to yield a passing scenario (see Figure 2).

Figure 2 The Behavior-Driven Development Cycle

Let’s look at an example of the difference. In a traditional TDD practice, you could write the test in Figure 3 to exercise the Create method of a CustomersController.

Figure 3 Unit Test for Creating a Customer

With TDD, this tends to be one of the first tests I write. I design a public API to my CustomersController object by setting expectations of how it will behave. With BDD I still create that test, but not at first. Instead, I elevate the focus to feature-level functionality by writing something more like Figure 4. I then use that scenario as guidance toward implementing each unit of code needed to make this scenario pass.

Figure 4 Feature-Level Specification

This is the outer loop in Figure 2, the failing Acceptance Test. Once this test has been created and fails, I implement each step of each scenario in my feature by following the inner TDD loop depicted in Figure 2. In the case of the CustomersController in Figure 3, I’ll write this test once I reach the proper step in my feature, but before I implement the controller logic needed to make that step pass.

Cucumber tests are written using User Story syntax for each feature file and a Given, When, Then (GWT) syntax for each scenario. (For details on User Story syntax, see c2.com/cgi/wiki?UserStory.) GWT describes the current context of the scenario (Given), the actions taken as a part of the test (When) and the expected, observable results (Then). The feature in Figure 4 is an example of such syntax.
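To make the Given, When, Then structure concrete, here is a minimal sketch in C# of how the step lines of a scenario could be classified. It is not how Cucumber or SpecFlow parse feature files; `StepKind`, `Step` and `ScenarioReader` are hypothetical names introduced only for illustration. The sketch assumes that an `And` line takes the kind of the step before it, and that table rows and header lines are simply skipped.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical types for illustration; not part of Cucumber or SpecFlow.
public enum StepKind { Given, When, Then }

public sealed class Step
{
    public StepKind Kind { get; }
    public string Text { get; }
    public Step(StepKind kind, string text) { Kind = kind; Text = text; }
}

public static class ScenarioReader
{
    // Classifies each step line. "And" inherits the kind of the preceding step;
    // anything that does not start with a known keyword is ignored.
    public static List<Step> ReadSteps(IEnumerable<string> lines)
    {
        var steps = new List<Step>();
        StepKind? current = null;

        foreach (var raw in lines)
        {
            var line = raw.Trim();
            var space = line.IndexOf(' ');
            if (space < 0) continue; // blank or single-word line

            var keyword = line.Substring(0, space);
            var text = line.Substring(space + 1).Trim();

            switch (keyword)
            {
                case "Given": current = StepKind.Given; break;
                case "When": current = StepKind.When; break;
                case "Then": current = StepKind.Then; break;
                case "And":
                    if (current == null)
                        throw new FormatException("'And' cannot be the first step.");
                    break;
                default:
                    continue; // table rows, "Scenario:" headers and so on
            }

            steps.Add(new Step(current.Value, text));
        }

        return steps;
    }
}
```

Fed the scenario lines from Figure 4, this would yield one Given step, three When steps (the `When` line and the two `And` lines after it) and one Then step, which mirrors the context, action and outcome split that GWT describes.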

In Cucumber, user-readable feature files are parsed, and each scenario step is matched to Ruby code that exercises the public interfaces of the system in question and determines if that step passes or fails.

In recent years, innovations enabling the use of scenarios as automated tests have extended into the .NET Framework ecosystem. Developers now have tools that enable specifications to be written using the same structured English syntax that Cucumber utilizes, and which can then use those specifications as tests that exercise the code. BDD testing tools like SpecFlow (specflow.org), Cuke4Nuke (github.com/richardlawrence/Cuke4Nuke) and others enable you to create executable specifications first in the process, leverage those specifications as you build out functionality and end with a documented feature that’s tied directly to your development and testing process.

Once you’ve added an AcceptanceTests project and added references to the TechTalk.SpecFlow assembly, add a new Feature using the Add | New Item templates that SpecFlow creates on installation and name it CreateCustomer.feature.

Notice that the file is created with a .feature extension, and that Visual Studio recognizes this as a supported file, thanks to SpecFlow’s integrated tooling. You may also notice that your feature file has a related .cs code-behind file. Each time you save a .feature file, SpecFlow parses the file and converts the text in that file into a test fixture. The code in the associated .cs file represents that test fixture, which is the code that’s actually executed each time you run your test suite.

By default, SpecFlow uses NUnit as its test-runner, but it also supports MSTest with a simple configuration change. All you need to do is add an app.config file to your test project and add the following elements:

Each feature file has two parts. The first part is the feature name and description at the top, which uses User Story syntax to describe the role of the user, the user’s goal, and the types of things the user needs to be able to do to achieve that goal in the system. This section is required by SpecFlow to auto-generate tests, but the content itself is not used in those tests.

The second part of each feature file is one or more scenarios. Each scenario is used to generate a test method in the associated .feature.cs file, as shown in Figure 5, and each step within a scenario is passed to the SpecFlow test runner, which performs a RegEx-based match of the step to an entry in a SpecFlow file called a Step Definition file.

Figure 5 Test Method Generated by SpecFlow

Once you’ve defined your first feature, press Ctrl+R,T to run your SpecFlow tests. Your CreateCustomer test will fail as inconclusive because SpecFlow cannot find a matching step definition for the first step in your test (see Figure 6). Notice how the exception is reported in the actual .feature file, as opposed to the code-behind file.

Figure 6 SpecFlow Cannot Find a Step Definition

Because you haven’t yet created a Step Definition file, this exception is expected. Click OK on the exception dialog and look for the CreateABasicCustomerRecord test in the Visual Studio Test Results window. If a matching step isn’t found, SpecFlow uses your feature file to generate the code you need in your step definition file, which you can copy and use to begin implementing those steps.

In your AcceptanceTests project, create a step definition file using the SpecFlow Step Definition template and name it CreateCustomer.cs. Then copy the output from SpecFlow into the class. You’ll notice that each method is decorated with a SpecFlow attribute that designates the method as a Given, When or Then step, and provides the RegEx used to match the method to a step in the feature file.
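The idea of an attribute that carries a RegEx and ties a method to a step can be sketched without SpecFlow at all. The following is only an illustration of the technique, not SpecFlow's implementation: `StepPatternAttribute`, `SampleSteps` and `StepDispatcher` are hypothetical names, and the sketch assumes every capture group maps to one string parameter.

```csharp
using System;
using System.Linq;
using System.Reflection;
using System.Text.RegularExpressions;

// Hypothetical stand-in for an attribute such as Given, When or Then.
[AttributeUsage(AttributeTargets.Method)]
public sealed class StepPatternAttribute : Attribute
{
    public string Pattern { get; }
    public StepPatternAttribute(string pattern) { Pattern = pattern; }
}

// Hypothetical step class; it records the link name instead of driving a browser.
public class SampleSteps
{
    public string LastClicked { get; private set; }

    [StepPattern("I click the \"(.*)\" link")]
    public void ClickLink(string linkName) { LastClicked = linkName; }
}

public static class StepDispatcher
{
    // Finds the first decorated method whose pattern matches the whole step
    // text and invokes it with the captured groups as string arguments.
    public static bool TryRun(object stepClass, string stepText)
    {
        foreach (var method in stepClass.GetType().GetMethods())
        {
            var attribute = method.GetCustomAttribute<StepPatternAttribute>();
            if (attribute == null) continue;

            var match = Regex.Match(stepText, "^" + attribute.Pattern + "$");
            if (!match.Success) continue;

            var args = match.Groups.Cast<Group>()
                                   .Skip(1) // group 0 is the whole match
                                   .Select(g => (object)g.Value)
                                   .ToArray();
            method.Invoke(stepClass, args);
            return true;
        }

        return false; // no decorated method matched this step text
    }
}
```

Calling `StepDispatcher.TryRun(new SampleSteps(), "I click the \"Create New Customer\" link")` would invoke `ClickLink` with `Create New Customer` as its argument, while a step with no matching pattern returns `false`. That second case corresponds to the missing step definition situation described above.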

One such tool is WatiN, an open source library for automating Web browser testing. You can download WatiN from watin.sourceforge.net and add a reference to WatiN.Core to your Acceptance Tests project to use it.

The primary way you interact with WatiN is through a browser object—either IE() or FireFox(), depending on your browser of choice—that provides a public interface to control an instance of an installed browser. Because you need to walk the browser through several steps in a scenario, you need a way to pass the same browser object between steps in the step definition class. To handle this, I usually create a WebBrowser static class as part of my AcceptanceTests project, and use that class to work with the WatiN IE object and the ScenarioContext that SpecFlow uses to store state between steps in a scenario:

The first step you’ll need to implement in CreateCustomer.cs is the Given step, which begins the test by logging the user into the site as an administrator:

Remember that the Given portion of a scenario is for setting up the context of the current test. With WatiN, you can have your test drive and interact with the browser to implement this step.

For this step, I use WatiN to open Internet Explorer, navigate to the Log On page of the site, fill out the User name and Password textboxes, and then click the Log On button on the screen. When I run the tests again, an Internet Explorer window will open automatically and I can observe WatiN in action as it interacts with the site, clicking links and entering text (see Figure 7).

Figure 7 The Browser on Autopilot with WatiN

The Given step will now pass and I’m a step closer to implementing the feature. SpecFlow will now fail on the first When step because the step is not yet implemented. You can implement it with the following code:

Now, when I run the tests again, they fail because WatiN cannot find a link with the text “Create New Customer” on the page. By simply adding a link with that text to the homepage, the next step will pass.

Sensing a pattern yet? SpecFlow encourages the same Red-Green-Refactor methodology that’s a staple of test-first development methods. The granularity of each step in a feature acts like virtual blinders for implementation, encouraging you to implement only the functionality that you need to make that step pass.

But what about TDD inside of the BDD process? I’m only working at the page level at this point, and I have yet to implement the functionality that actually creates the customer record. For the sake of brevity, let’s implement the rest of the steps now (see Figure 8).

Figure 8 Remaining Steps in the Step Definition

I re-run my tests, and things now fail because I don’t have a page to enter customer information. To allow customers to be created, I need a Create Customer View page. In order to deliver such a view in ASP.NET MVC, I need a CustomersController that delivers that view. I now need new code, which means I’m stepping from the outer loop of BDD and into the inner loop of TDD, as shown back in Figure 2.

The first step is to create a failing unit test.

This code doesn’t yet compile because you haven’t created CustomersController or its Create method. Upon creating that controller and an empty Create method, the code compiles and the test now fails, which is the desired next step. If you complete the Create method, the test now passes:

If you re-run the SpecFlow tests, you get a bit further, but the Feature still doesn’t pass. This time, the test will fail because you don’t have a Create.aspx view page. If you add it along with the proper fields as directed by the feature, you’ll move another step closer to a completed feature.

The outside-in process for implementing this Create functionality looks something like Figure 9.

Figure 9 Scenario-to-Unit Test Process

Those same steps will repeat themselves often in this process, and your speed in iterating over them will increase greatly over time, especially as you implement helper steps (clicking links and buttons, filling in forms and so on) in the AcceptanceTests project and get down to testing the key functionality in each scenario.

From a valid Create View, the Feature will now fill out the appropriate form fields and will attempt to submit the form. You can guess by now what happens next: The test will fail because you don’t yet have the logic needed to save the customer record.

Following the same process as before, create the test using the unit-test code shown earlier in Figure 3. After adding an empty Create method that accepts a customer object to allow this test to compile, you watch it fail, then complete the Create method like so:

My Controller is just a controller, and the actual creation of the customer record belongs to a Repository object that has knowledge of a data storage mechanism. I’ve left that implementation out of this article for brevity, but it’s important to note that, in a real scenario, the need for a repository to save a customer should kick off another sub-loop of unit testing. When you need access to any collaborating object and that object does not yet exist, or doesn’t offer the functionality you require, you should follow the same unit test loop that you’re following for your Feature and Controllers.
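Since the repository is left out of the article, here is a rough, framework-free sketch of the division of labor described above. Everything in it is an assumption made for illustration: `ICustomerRepository`, `InMemoryCustomerRepository` and `CustomersControllerSketch` are hypothetical names, the controller takes its repository through the constructor (the test in Figure 3 uses a parameterless constructor), and a `KeyValuePair` of view name and model stands in for the MVC `ViewResult`.

```csharp
using System.Collections.Generic;

public class Customer
{
    public int Id { get; set; }
    public string Name { get; set; }
    public string Email { get; set; }
    public string Phone { get; set; }
}

// Hypothetical abstraction; the article does not show its repository.
public interface ICustomerRepository
{
    Customer Save(Customer customer);
}

// In-memory stand-in that assigns increasing ids, as a data store might.
public class InMemoryCustomerRepository : ICustomerRepository
{
    private readonly List<Customer> _customers = new List<Customer>();

    public Customer Save(Customer customer)
    {
        customer.Id = _customers.Count + 1;
        _customers.Add(customer);
        return customer;
    }
}

// Stand-in for the controller: it only delegates to the repository and
// reports which view to render together with the saved model.
public class CustomersControllerSketch
{
    private readonly ICustomerRepository _repository;

    public CustomersControllerSketch(ICustomerRepository repository)
    {
        _repository = repository;
    }

    public KeyValuePair<string, Customer> Create(Customer customer)
    {
        var saved = _repository.Save(customer);
        return new KeyValuePair<string, Customer>("Details", saved);
    }
}
```

Keeping storage behind an interface means the controller test can run against the in-memory stand-in, while the repository gets its own unit test sub-loop, as the paragraph above suggests.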

Once you’ve implemented the Create method and have a working repository, you’ll need to create the Details View, which takes the new customer record and displays it on the page. Then you can run SpecFlow once more. Finally, after many TDD loops and sub-loops, you now have a passing feature that proves out some end-to-end functionality in your system.

Congratulations! You’ve now implemented a unit of end-to-end functionality with an acceptance test and a complete set of unit tests that will ensure the new functionality will continue to work as your system expands to add new features.

Be on the lookout for opportunities to refactor the code in your AcceptanceTests project as well. You’ll find that some steps tend to be repeated often across several features, especially your Given steps. With SpecFlow, you can easily move these steps into separate Step Definition files organized by function, such as LogInSteps.cs. This leaves your main Step Definition files clean and targeted at the unique scenario you’re specifying.

BDD is about focus in design and development. By elevating your focus from an object to a feature, you enable yourself and your team to design from the perspective of the user of a system. As feature design becomes unit design, be sure to author tests with your feature in mind, and ensure the tests are guided by discrete steps or tasks.

Like any other practice or discipline, BDD takes time to fit into your workflow. I encourage you to try it out for yourself, using any of the available tools, and see how it works over time. As you develop in this style, pay attention to the questions that BDD encourages you to ask. Constantly pause and look for ways to improve your practice and process, and collaborate with others on ideas for improvement. My hope is that, no matter your toolset, the study of BDD adds value and focus to your own software development practice.

A Brief History of Automated Testing

One of the most valuable practices to emerge from the Agile Software movement is an automated, test-first development style, often referred to as Test-Driven Development, or TDD. A key tenet of TDD is that test creation is as much about design and development guidance as it is about verification and regression. It’s also about using the test to specify a unit of required functionality, and using that test to then write only the code needed to deliver that functionality. Therefore, the first step in implementing any new functionality is to describe your expectations with a failing test (see Figure 1).

Where Design Begins

BDD is considered by many a superset of TDD, not a replacement for it. The key difference is the focus on initial design and test creation. Rather than focusing on tests against units or objects, as with TDD, I focus on the goals of my users and the steps they take to achieve those goals. Because I’m no longer starting with tests of small units, I’m less inclined to speculate on fine-grained usage or design details. Rather, I’m documenting executable specifications that prove out my system. I still write unit tests, but BDD encourages an outside-in approach that starts with a full description of the feature to be implemented.

Let’s look at an example of the difference. In a traditional TDD practice, you could write the test in Figure 3 to exercise the Create method of a CustomersController.

Figure 3 Unit Test for Creating a Customer

    [TestMethod]
    public void PostCreateShouldSaveCustomerAndReturnDetailsView() {
      var customersController = new CustomersController();
      var customer = new Customer {
        Name = "Hugo Reyes",
        Email = "hreyes@dharmainitiative.com",
        Phone = "720-123-5477"
      };

      var result = customersController.Create(customer) as ViewResult;

      Assert.IsNotNull(result);
      Assert.AreEqual("Details", result.ViewName);
      Assert.IsInstanceOfType(result.ViewData.Model, typeof(Customer));

      customer = result.ViewData.Model as Customer;
      Assert.IsNotNull(customer);
      Assert.IsTrue(customer.Id > 0);
    }

Figure 4 Feature-Level Specification

    Feature: Create a new customer
      In order to improve customer service and visibility
      As a site administrator
      I want to be able to create, view and manage customer records

    Scenario: Create a basic customer record
      Given I am logged into the site as an administrator
      When I click the "Create New Customer" link
      And I enter the following information
        | Field | Value                       |
        | Name  | Hugo Reyes                  |
        | Email | hreyes@dharmainitiative.com |
        | Phone | 720-123-5477                |
      And I click the "Create" button
      Then I should see the following details on the screen:
        | Value                       |
        | Hugo Reyes                  |
        | hreyes@dharmainitiative.com |
        | 720-123-5477                |

BDD and Automated Testing

From the start, the BDD community has sought to provide the same level of automated testing with Acceptance Tests that has been the norm in unit testing for some time. One notable example is Cucumber (cukes.info), a Ruby-based testing tool that emphasizes the creation of feature-level Acceptance Tests written in a “business-readable, domain-specific language.”

Cucumber tests are written using User Story syntax for each feature file and a Given, When, Then (GWT) syntax for each scenario. (For details on User Story syntax, see c2.com/cgi/wiki?UserStory.) GWT describes the current context of the scenario (Given), the actions taken as a part of the test (When) and the expected, observable results (Then). The feature in Figure 4 is an example of such syntax.

Getting Started with SpecFlow and WatiN

In this article, I’ll utilize SpecFlow to test a Model-View-Controller (MVC) application. To get started with SpecFlow, you’ll first need to download and install it. Once SpecFlow is installed, create a new ASP.NET MVC application with a unit test project. I prefer that my unit test project contain only unit tests (controller tests, repository tests and so on), so I also create an AcceptanceTests test project for my SpecFlow tests.

Once you’ve added an AcceptanceTests project and added references to the TechTalk.SpecFlow assembly, add a new Feature using the Add | New Item templates that SpecFlow creates on installation and name it CreateCustomer.feature.

By default, SpecFlow uses NUnit as its test-runner, but it also supports MSTest with a simple configuration change. All you need to do is add an app.config file to your test project and add the following elements:

    <configSections>
      <section name="specFlow"
        type="TechTalk.SpecFlow.Configuration.ConfigurationSectionHandler, TechTalk.SpecFlow"/>
    </configSections>
    <specFlow>
      <unitTestProvider name="MsTest" />
    </specFlow>

Your First Acceptance Test

When you create a new feature, SpecFlow populates that file with default text to illustrate the syntax used to describe a feature. Replace the default text in your CreateCustomer.feature file with the text in Figure 4.

Each feature file has two parts. The first part is the feature name and description at the top, which uses User Story syntax to describe the role of the user, the user’s goal, and the types of things the user needs to be able to do to achieve that goal in the system. This section is required by SpecFlow to auto-generate tests, but the content itself is not used in those tests.

The second part of each feature file is one or more scenarios. Each scenario is used to generate a test method in the associated .feature.cs file, as shown in Figure 5, and each step within a scenario is passed to the SpecFlow test runner, which performs a RegEx-based match of the step to an entry in a SpecFlow file called a Step Definition file.

Figure 5 Test Method Generated by SpecFlow

    public virtual void CreateABasicCustomerRecord() {
      TechTalk.SpecFlow.ScenarioInfo scenarioInfo =
        new TechTalk.SpecFlow.ScenarioInfo(
          "Create a basic customer record", ((string[])(null)));

      this.ScenarioSetup(scenarioInfo);
      testRunner.Given(
        "I am logged into the site as an administrator");
      testRunner.When("I click the \"Create New Customer\" link");

      TechTalk.SpecFlow.Table table1 =
        new TechTalk.SpecFlow.Table(new string[] {
          "Field", "Value"});
      table1.AddRow(new string[] {
        "Name", "Hugo Reyes"});
      table1.AddRow(new string[] {
        "Email", "hreyes@dharmainitiative.com"});
      table1.AddRow(new string[] {
        "Phone", "720-123-5477"});

      testRunner.And("I enter the following information",
        ((string)(null)), table1);
      testRunner.And("I click the \"Create\" button");

      TechTalk.SpecFlow.Table table2 =
        new TechTalk.SpecFlow.Table(new string[] {
          "Value"});
      table2.AddRow(new string[] {
        "Hugo Reyes"});
      table2.AddRow(new string[] {
        "hreyes@dharmainitiative.com"});
      table2.AddRow(new string[] {
        "720-123-5477"});
      testRunner.Then("I should see the following details on screen:",
        ((string)(null)), table2);
      testRunner.CollectScenarioErrors();
    }

Integrating WatiN for Browser Testing

Part of the goal with BDD is to create an automated test suite that exercises as much end-to-end system functionality as possible. Because I’m building an ASP.NET MVC application, I can use tools that help script the Web browser to interact with the site.

One such tool is WatiN, an open source library for automating Web browser testing. You can download WatiN from watin.sourceforge.net and add a reference to WatiN.Core to your Acceptance Tests project to use it.

The primary way you interact with WatiN is through a browser object—either IE() or FireFox(), depending on your browser of choice—that provides a public interface to control an instance of an installed browser. Because you need to walk the browser through several steps in a scenario, you need a way to pass the same browser object between steps in the step definition class. To handle this, I usually create a WebBrowser static class as part of my AcceptanceTests project, and use that class to work with the WatiN IE object and the ScenarioContext that SpecFlow uses to store state between steps in a scenario:

    public static class WebBrowser {
      public static IE Current {
        get {
          if (!ScenarioContext.Current.ContainsKey("browser"))
            ScenarioContext.Current["browser"] = new IE();
          return ScenarioContext.Current["browser"] as IE;
        }
      }
    }
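The get-or-create pattern behind WebBrowser.Current can be tried without a browser. The sketch below swaps in a plain Dictionary<string, object> for ScenarioContext.Current and a hypothetical FakeBrowser class for WatiN's IE. Both are stand-ins, as is the ScenarioBrowser name, chosen only so that the example runs with no dependencies.

```csharp
using System;
using System.Collections.Generic;

// Stand-in for WatiN's IE class (hypothetical, for illustration).
public sealed class FakeBrowser {
    public static int InstancesCreated;
    public FakeBrowser() { InstancesCreated++; }
}

public static class ScenarioBrowser {
    // Stand-in for ScenarioContext.Current: state shared by the steps of one scenario.
    public static readonly Dictionary<string, object> Context =
        new Dictionary<string, object>();

    public static FakeBrowser Current {
        get {
            // Create the browser on first use, then hand back the same instance.
            if (!Context.ContainsKey("browser"))
                Context["browser"] = new FakeBrowser();
            return (FakeBrowser)Context["browser"];
        }
    }
}
```

Reading ScenarioBrowser.Current twice returns the same object, which is what lets every step in a scenario drive one browser window. The sketch uses a direct cast rather than as, so a value of the wrong type stored under the key fails immediately instead of surfacing later as a null reference.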

With the WebBrowser class in place, the Given step in CreateCustomer.cs can log in to the site as an administrator:

    [Given(@"I am logged into the site as an administrator")]
    public void GivenIAmLoggedIntoTheSiteAsAnAdministrator() {
      WebBrowser.Current.GoTo("http://localhost:24613/Account/LogOn");
      WebBrowser.Current.TextField(Find.ByName("UserName")).TypeText("admin");
      WebBrowser.Current.TextField(Find.ByName("Password")).TypeText("pass123");
      WebBrowser.Current.Button(Find.ByValue("Log On")).Click();

      Assert.IsTrue(WebBrowser.Current.Link(Find.ByText("Log Off")).Exists);
    }

For this step, I use WatiN to open Internet Explorer, navigate to the Log On page of the site, fill out the User name and Password textboxes, and then click the Log On button on the screen. When I run the tests again, an Internet Explorer window will open automatically and I can observe WatiN in action as it interacts with the site, clicking links and entering text (see Figure 7).

Figure 7 The Browser on Autopilot with WatiN

The Given step will now pass and I’m a step closer to implementing the feature. SpecFlow will now fail on the first When step because the step is not yet implemented. You can implement it with the following code:

    [When("I click the \"(.*)\" link")]
    public void WhenIClickALinkNamed(string linkName) {
      var link = WebBrowser.Current.Link(Find.ByText(linkName));

      if (!link.Exists)
        Assert.Fail(string.Format(
          "Could not find {0} link on the page", linkName));

      link.Click();
    }

Sensing a pattern yet? SpecFlow encourages the same Red-Green-Refactor methodology that’s a staple of test-first development methods. The granularity of each step in a feature acts like virtual blinders for implementation, encouraging you to implement only the functionality that you need to make that step pass.

But what about TDD inside of the BDD process? I’m only working at the page level at this point, and I have yet to implement the functionality that actually creates the customer record. For the sake of brevity, let’s implement the rest of the steps now (see Figure 8).

Figure 8 Remaining Steps in the Step Definition

    [When(@"I enter the following information")]
    public void WhenIEnterTheFollowingInformation(Table table) {
      foreach (var tableRow in table.Rows) {
        var field = WebBrowser.Current.TextField(
          Find.ByName(tableRow["Field"]));

        if (!field.Exists)
          Assert.Fail(string.Format(
            "Could not find {0} field on the page", tableRow["Field"]));
        field.TypeText(tableRow["Value"]);
      }
    }

    [When("I click the \"(.*)\" button")]
    public void WhenIClickAButtonWithValue(string buttonValue) {
      var button = WebBrowser.Current.Button(Find.ByValue(buttonValue));

      if (!button.Exists)
        Assert.Fail(string.Format(
          "Could not find {0} button on the page", buttonValue));

      button.Click();
    }

    [Then(@"I should see the following details on the screen:")]
    public void ThenIShouldSeeTheFollowingDetailsOnTheScreen(
      Table table) {
      foreach (var tableRow in table.Rows) {
        var value = tableRow["Value"];

        Assert.IsTrue(WebBrowser.Current.ContainsText(value),
          string.Format(
            "Could not find text {0} on the page", value));
      }
    }

The first step is to create a failing unit test.

Writing Unit Tests to Implement Steps

After creating a CustomerControllersTests test class in the UnitTest project, you need to create a test method that exercises the functionality to be exposed in the CustomersController. Specifically, you want to create a new instance of the Controller, call its Create method and ensure that you receive the proper View and Model in return:

    [TestMethod]
    public void GetCreateShouldReturnCustomerView() {
      var customersController = new CustomersController();
      var result = customersController.Create() as ViewResult;

      Assert.AreEqual("Create", result.ViewName);
      Assert.IsInstanceOfType(
        result.ViewData.Model, typeof(Customer));
    }

Once the test fails for the right reason, a Create action on CustomersController that returns the Create view with a new Customer makes it pass:

    public ActionResult Create() {
      return View("Create", new Customer());
    }

The outside-in process for implementing this Create functionality looks something like Figure 9.

Figure 9 Scenario-to-Unit Test Process

Those same steps will repeat themselves often in this process, and your speed in iterating over them will increase greatly over time, especially as you implement helper steps (clicking links and buttons, filling in forms and so on) in the AcceptanceTests project and get down to testing the key functionality in each scenario.

From a valid Create View, the Feature will now fill out the appropriate form fields and will attempt to submit the form. You can guess by now what happens next: The test will fail because you don’t yet have the logic needed to save the customer record.

Following the same process as before, create the test using the unit-test code shown earlier in Figure 3. After adding an empty Create method that accepts a customer object to allow this test to compile, you watch it fail, then complete the Create method like so:

    [AcceptVerbs(HttpVerbs.Post)]
    public ActionResult Create(Customer customer) {
      _repository.Create(customer);

      return View("Details", customer);
    }

Once you’ve implemented the Create method and have a working repository, you’ll need to create the Details View, which takes the new customer record and displays it on the page. Then you can run SpecFlow once more. Finally, after many TDD loops and sub-loops, you now have a passing feature that proves out some end-to-end functionality in your system.
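For readers without Figure 3 at hand, the shape of a unit test for the posting Create method can be sketched without ASP.NET MVC at all. Everything in the block below is a simplified stand-in and an assumption: ICustomerRepository, FakeCustomerRepository, ViewOutcome, and CustomersControllerSketch are hypothetical names, the Customer class has a single made-up Name property, and passing the repository through the constructor is one common choice, not something the article shows.

```csharp
using System;
using System.Collections.Generic;

public class Customer { public string Name { get; set; } }

// Hypothetical repository abstraction; the article only shows _repository.Create(customer).
public interface ICustomerRepository { void Create(Customer customer); }

// Hand-rolled fake that records what the controller asked it to save.
public class FakeCustomerRepository : ICustomerRepository {
    public readonly List<Customer> Saved = new List<Customer>();
    public void Create(Customer customer) { Saved.Add(customer); }
}

// Simplified stand-in for an MVC view result: just a view name and a model.
public class ViewOutcome {
    public string ViewName;
    public object Model;
}

// Simplified stand-in for CustomersController with the same Create logic.
public class CustomersControllerSketch {
    private readonly ICustomerRepository _repository;

    public CustomersControllerSketch(ICustomerRepository repository) {
        _repository = repository;
    }

    public ViewOutcome Create(Customer customer) {
        _repository.Create(customer);   // hand the record to the repository
        return new ViewOutcome { ViewName = "Details", Model = customer };
    }
}
```

A test built on these stand-ins creates a FakeCustomerRepository, passes it to the controller, calls Create with a Customer, and then checks three things: the fake recorded exactly that customer, the returned view name is Details, and the model is the same customer instance. The fake keeps the unit test independent of any database, which is the role the real repository abstraction would play.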

Congratulations! You’ve now implemented a unit of end-to-end functionality with an acceptance test and a complete set of unit tests that will ensure the new functionality will continue to work as your system expands to add new features.

A Word About Refactoring

Hopefully, as you create unit-level tests in your UnitTests project, you’re constantly refactoring with each test creation. As you move back up the chain from passing unit tests to a passing acceptance test, you should follow the same process, watching for opportunities to refactor and refine your implementation for each feature and all the features that come after.

Be on the lookout for opportunities to refactor the code in your AcceptanceTests project as well. You’ll find that some steps tend to be repeated often across several features, especially your Given steps. With SpecFlow, you can easily move these steps into separate Step Definition files organized by function, such as LogInSteps.cs. This leaves your main Step Definition files clean and targeted at the unique scenario you’re specifying.

BDD is about focus in design and development. By elevating your focus from an object to a feature, you enable yourself and your team to design from the perspective of the user of a system. As feature design becomes unit design, be sure to author tests with your feature in mind, and ensure the tests are guided by discrete steps or tasks.

Like any other practice or discipline, BDD takes time to fit into your workflow. I encourage you to try it out for yourself, using any of the available tools, and see how it works over time. As you develop in this style, pay attention to the questions that BDD encourages you to ask. Constantly pause and look for ways to improve your practice and process, and collaborate with others on ideas for improvement. My hope is that, no matter your toolset, the study of BDD adds value and focus to your own software development practice.

Saturday, February 8, 2014

C# Volatile Constructs

Volatile Constructs

Back in the early days of computing, software was written using assembly language. Assembly language is very tedious, because programmers must explicitly state everything: use this CPU register for this, branch to that, call indirect through this other thing, and so on. To simplify programming, higher-level languages were introduced. These higher-level languages introduced common useful constructs, like if/else, switch/case, various loops, local variables, arguments, virtual method calls, operator overloads, and much more. Ultimately, these language compilers must convert the high-level constructs down to the low-level constructs so that the computer can actually do what you want it to do.

In other words, the C# compiler translates your C# constructs into Intermediate Language (IL), which is then converted by the just-in-time (JIT) compiler into native CPU instructions, which must then be processed by the CPU itself. In addition, the C# compiler, the JIT compiler, and even the CPU itself can optimize your code. For example, the following ridiculous method can ultimately be compiled into nothing:

    private static void OptimizedAway() {
       // Constant expression is computed at compile time resulting in zero
       Int32 value = (1 * 100) - (50 * 2);

       // If value is 0, the loop never executes
       for (Int32 x = 0; x < value; x++) {
          // There is no need to compile the code in the loop since it can never execute
          Console.WriteLine("Jeff");
       }
    }

In this code, the compiler can see that value will always be 0; therefore, the loop will never execute and consequently, there is no need to compile the code inside the loop. This method could be compiled down to nothing. In fact, when JITting a method that calls OptimizedAway, the JITter will try to inline the OptimizedAway method’s code. Since there is no code, the JITter will even remove the code that tries to call OptimizedAway. We love this feature of compilers. As developers, we get to write the code in the way that makes the most sense to us. The code should be easy to write, read, and maintain. Then compilers translate our intentions into machine-understandable code. We want our compilers to do the best job possible for us.

When the C# compiler, JIT compiler, and CPU optimize our code, they guarantee us that the intention of the code is preserved. That is, from a single-threaded perspective, the method does what we want it to do, although it may not do it exactly the way we described in our source code. However, the intention might not be preserved from a multithreaded perspective. Here is an example where the optimizations make the program not work as expected:

    internal static class StrangeBehavior {
       // As you'll see later, mark this field as volatile to fix the problem
       private static Boolean s_stopWorker = false;

       public static void Main() {
          Console.WriteLine("Main: letting worker run for 5 seconds");
          Thread t = new Thread(Worker);
          t.Start();
          Thread.Sleep(5000);
          s_stopWorker = true;
          Console.WriteLine("Main: waiting for worker to stop");
          t.Join();
       }

       private static void Worker(Object o) {
          Int32 x = 0;
          while (!s_stopWorker) x++;
          Console.WriteLine("Worker: stopped when x={0}", x);
       }
    }

In this code, the Main method creates a new thread that executes the Worker method. This Worker method counts as high as it can before being told to stop. The Main method allows the Worker thread to run for 5 seconds before telling it to stop by setting the static Boolean field to true. At this point, the Worker thread should display what it counted up to, and then the thread will terminate. The Main thread waits for the Worker thread to terminate by calling Join, and then the Main thread returns, causing the whole process to terminate.

Looks simple enough, right? Well, the program has a potential problem due to all the optimizations that could happen to it. You see, when the Worker method is compiled, the compiler sees that s_stopWorker is either true or false, and it also sees that this value never changes inside the Worker method itself. So the compiler could produce code that checks s_stopWorker first. If s_stopWorker is true, then “Worker: stopped when x=0” will be displayed. If s_stopWorker is false, then the compiler produces code that enters an infinite loop that increments x forever. You see, the optimizations cause the loop to run very fast because checking s_stopWorker only occurs once before the loop; it does not get checked with each iteration of the loop.

If you actually want to see this in action, put this code in a .cs file and compile the code using C#’s /platform:x86 and /optimize+ switches. Then run the resulting EXE file, and you’ll see that the program runs forever. Note that you have to compile for x86, ensuring that the x86 JIT compiler is used at runtime. The x86 JIT compiler is more mature than the x64 JIT compiler, so it performs more aggressive optimizations. The x64 JIT compiler does not perform this particular optimization, and therefore the program runs to completion. This highlights another interesting point about all of this. Whether your program behaves as expected depends on a lot of factors, such as which compiler version and compiler switches are used, which JIT compiler is used, and which CPU your code is running on. In addition, to see the program above run forever, you must not run the program under a debugger because the debugger causes the JIT compiler to produce unoptimized code that is easier to step through.

Let’s look at another example, which has two threads that are both accessing two fields:

    internal sealed class ThreadsSharingData {
       private Int32 m_flag = 0;
       private Int32 m_value = 0;

       // This method is executed by one thread
       public void Thread1() {
          // Note: These could execute in reverse order
          m_value = 5;
          m_flag = 1;
       }

       // This method is executed by another thread
       public void Thread2() {
          // Note: m_value could be read before m_flag
          if (m_flag == 1)
             Console.WriteLine(m_value);
       }
    }

The problem with this code is that the compilers/CPU could translate the code in such a way as to reverse the two lines of code in the Thread1 method. After all, reversing the two lines of code does not change the intention of the method. The method needs to get a 5 in m_value and a 1 in m_flag. From a single-threaded application’s perspective, the order of executing this code is unimportant. If these two lines do execute in reverse order, then another thread executing the Thread2 method could see that m_flag is 1 and then display 0.

Let’s look at this code another way. Let’s say that the code in the Thread1 method executes in program order (the way it was written). When compiling the code in the Thread2 method, the compiler must generate code to read m_flag and m_value from RAM into CPU registers. It is possible that RAM will deliver the value of m_value first, which would contain a 0. Then the Thread1 method could execute, changing m_value to 5 and m_flag to 1. But Thread2’s CPU register doesn’t see that m_value has been changed to 5 by this other thread, and then the value in m_flag could be read from RAM into a CPU register and the value of m_flag becomes 1 now, causing Thread2 to again display 0.

This is all very scary stuff and is more likely to cause problems in a release build of your program than in a debug build of your program, making it particularly tricky to detect these problems and correct your code. Now, let’s talk about how to correct your code.

The static System.Threading.Volatile class offers two static methods that look like this (see footnote 68):

    public static class Volatile {
       public static void Write(ref Int32 location, Int32 value);
       public static Int32 Read(ref Int32 location);
    }

These methods are special. In effect, these methods disable some optimizations usually performed by the C# compiler, the JIT compiler, and the CPU itself. Here’s how the methods work:

• The Volatile.Write method forces the value in location to be written to at the point of the call. In addition, any earlier program-order loads and stores must occur before the call to Volatile.Write.

• The Volatile.Read method forces the value in location to be read from at the point of the call. In addition, any later program-order loads and stores must occur after the call to Volatile.Read.

Important: I know that this can be very confusing, so let me summarize it as a simple rule. When threads are communicating with each other via shared memory, write the last value by calling Volatile.Write and read the first value by calling Volatile.Read.

So now we can fix the ThreadsSharingData class using these methods:

    internal sealed class ThreadsSharingData {
       private Int32 m_flag = 0;
       private Int32 m_value = 0;

       // This method is executed by one thread
       public void Thread1() {
          // Note: 5 must be written to m_value before 1 is written to m_flag
          m_value = 5;
          Volatile.Write(ref m_flag, 1);
       }

       // This method is executed by another thread
       public void Thread2() {
          // Note: m_value must be read after m_flag is read
          if (Volatile.Read(ref m_flag) == 1)
             Console.WriteLine(m_value);
       }
    }

First, notice that we are following the rule. The Thread1 method writes two values out to fields that are shared by multiple threads. The last value that we want written (setting m_flag to 1) is performed by calling Volatile.Write. The Thread2 method reads two values from fields shared by multiple threads, and the first value being read (m_flag) is performed by calling Volatile.Read.

Footnote 68: There are also overloads of Read and Write that operate on the following types: Boolean, (S)Byte, (U)Int16, UInt32, (U)Int64, (U)IntPtr, Single, Double, and T where T is a generic type constrained to ‘class’ (reference types).

But what is really happening here? Well, for the Thread1 method, the Volatile.Write call ensures that all the writes above it are completed before a 1 is written to m_flag. Since m_value = 5 is before the call to Volatile.Write, it must complete first. In fact, if there were many variables being modified before the call to Volatile.Write, they would all have to complete before 1 is written to m_flag. Note that the writes before the call to Volatile.Write can be optimized to execute in any order; it’s just that all the writes have to complete before the call to Volatile.Write.

For the Thread2 method, the Volatile.Read call ensures that all variable reads after it start after the value in m_flag has been read. Since reading m_value is after the call to Volatile.Read, the value must be read after having read the value in m_flag. If there were many reads after the call to Volatile.Read, they would all have to start after the value in m_flag has been read. Note that the reads after the call to Volatile.Read can be optimized to execute in any order; it’s just that the reads can’t start happening until after the call to Volatile.Read.
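The "many variables" case described above can be sketched as follows. The Mailbox class and its member names are hypothetical and do not come from the original text; the sketch only applies the stated rule, with several ordinary writes followed by one Volatile.Write, and one Volatile.Read followed by several ordinary reads.

```csharp
using System;
using System.Threading;

// Hypothetical class for illustration; the names are not from the original text.
internal sealed class Mailbox {
    private String m_subject;    // ordinary field
    private Int32 m_priority;    // ordinary field
    private Int32 m_ready = 0;   // flag accessed only through Volatile.Read/Write

    // Executed by the producing thread.
    public void Publish(String subject, Int32 priority) {
        // These two writes may be reordered relative to each other...
        m_subject = subject;
        m_priority = priority;
        // ...but both must complete before 1 is written to m_ready.
        Volatile.Write(ref m_ready, 1);
    }

    // Executed by the consuming thread.
    public Boolean TryRead(out String subject, out Int32 priority) {
        // m_ready is read first; the reads below cannot start before it.
        if (Volatile.Read(ref m_ready) == 1) {
            subject = m_subject;
            priority = m_priority;
            return true;
        }
        subject = null;
        priority = 0;
        return false;
    }
}
```

Before Publish is called, TryRead returns false. Once a consumer sees m_ready equal to 1, it is guaranteed to see the subject and priority that were written before the flag. The sketch assumes Publish is called only once; publishing repeatedly would need additional coordination that is outside the scope of this rule.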

C#’s Support for Volatile Fields

Making sure that programmers call the Volatile.Read and Volatile.Write methods correctly is a lot to ask. It’s hard for programmers to keep all of this in their minds and to start imagining what other threads might be doing to shared data in the background. To simplify this, the C# compiler has the volatile keyword, which can be applied to static or instance fields of any of these types: Boolean, (S)Byte, (U)Int16, (U)Int32, (U)IntPtr, Single, or Char. You can also apply the volatile keyword to reference types and any enum field so long as the enumerated type has an underlying type of (S)Byte, (U)Int16, or (U)Int32. The JIT compiler ensures that all accesses to a volatile field are performed as volatile reads and writes, so that it is not necessary to explicitly call Volatile's static Read or Write methods. Furthermore, the volatile keyword tells the C# and JIT compilers not to cache the field in a CPU register, ensuring that all reads of the field actually cause the value to be read from memory.

Using the volatile keyword, we can rewrite the ThreadsSharingData class as follows:

    internal sealed class ThreadsSharingData {
       private volatile Int32 m_flag = 0;
       private Int32 m_value = 0;

       // This method is executed by one thread
       public void Thread1() {
          // Note: 5 must be written to m_value before 1 is written to m_flag
          m_value = 5;
          m_flag = 1;
       }

       // This method is executed by another thread
       public void Thread2() {
          // Note: m_value must be read after m_flag is read
          if (m_flag == 1)
             Console.WriteLine(m_value);
       }
    }
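The comment in the earlier StrangeBehavior listing says that marking the field as volatile fixes the problem. A minimal sketch of that fix follows. The class and method names (VolatileFlagWorker, RunAndStop) and the timeout parameters are illustrative additions so that the behavior can be checked from calling code; they are not part of the original listing.

```csharp
using System;
using System.Threading;

// Illustrative variant of StrangeBehavior; the names are assumptions.
internal static class VolatileFlagWorker {
    // volatile makes every read and write of this field a volatile access.
    private static volatile Boolean s_stopWorker = false;

    // Lets the worker run for runMilliseconds, then asks it to stop.
    // Returns true if the worker thread terminated within the join timeout.
    public static Boolean RunAndStop(Int32 runMilliseconds, Int32 joinTimeoutMilliseconds) {
        s_stopWorker = false;
        Thread t = new Thread(Worker);
        t.Start();
        Thread.Sleep(runMilliseconds);
        s_stopWorker = true;                      // volatile write
        return t.Join(joinTimeoutMilliseconds);
    }

    private static void Worker() {
        Int32 x = 0;
        // The field is re-read on every iteration, so the check
        // cannot be hoisted out of the loop.
        while (!s_stopWorker) x++;
        Console.WriteLine("Worker: stopped when x={0}", x);
    }
}
```

Calling VolatileFlagWorker.RunAndStop(100, 5000) should return true on any JIT compiler, because the loop condition now performs a volatile read each time. The value of x printed by the worker varies from run to run.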

There are some developers (and I am one of them) who do not like C#’s volatile keyword, and they think that the language should not provide it. Our thinking is that most algorithms require few volatile read or write accesses to a field and that most other accesses to the field can occur normally, improving performance; seldom is it required that all accesses to a field be volatile. For example, it is difficult to interpret how to apply volatile read operations to algorithms like this one:

    m_amount = m_amount + m_amount; // Assume m_amount is a volatile field defined in a class

Normally, an integer number can be doubled simply by shifting all bits left by 1 bit, and many compilers can examine the code above and perform this optimization. However, if m_amount is a volatile field, then this optimization is not allowed. The compiler must produce code to read m_amount into a register and then read it again into another register, add the two registers together, and then write the result back out to the m_amount field. The unoptimized code is certainly bigger and slower; it would be unfortunate if it were contained inside a loop.
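One way to act on this preference is to leave the field non-volatile and make only the accesses that need it volatile. The sketch below is an assumption about how that might look, not code from the original text; the Account class and its members are hypothetical names.

```csharp
using System;
using System.Threading;

// Hypothetical class for illustration.
internal sealed class Account {
    // Ordinary field: volatile semantics are applied only where needed.
    private Int32 m_amount;

    public Account(Int32 amount) { m_amount = amount; }

    public Int32 Amount {
        get { return Volatile.Read(ref m_amount); }
    }

    // Doubles the amount using one volatile read and one volatile write.
    // This read-modify-write is NOT atomic; concurrent writers would still
    // need a lock or an interlocked operation.
    public void DoubleAmount() {
        Int32 amount = Volatile.Read(ref m_amount);     // single volatile read
        Volatile.Write(ref m_amount, amount + amount);  // arithmetic on the local copy
    }
}
```

Because the addition works on a local copy, the compiler is free to optimize it, and the field is read from memory once instead of twice. For example, an Account created with 21 reports an Amount of 42 after one call to DoubleAmount.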

Furthermore, C# does not support passing a volatile field by reference to a method. For example, if m_amount is defined as a volatile Int32, attempting to call Int32’s TryParse method causes the compiler to generate a warning as shown here:

    Boolean success = Int32.TryParse("123", out m_amount);
    // The above line causes the C# compiler to generate a warning:
    // CS0420: a reference to a volatile field will not be treated as volatile
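A common way to avoid the warning is to parse into a local variable and then assign the volatile field. The sketch below shows this; the AmountSetting class and the TrySetAmount method are hypothetical names used only for illustration.

```csharp
using System;

// Hypothetical class for illustration.
internal sealed class AmountSetting {
    private volatile Int32 m_amount;

    public Int32 Amount {
        get { return m_amount; }   // volatile read
    }

    // Parses into a local variable first, then assigns the volatile field,
    // so no reference to the volatile field is ever passed to a method.
    public Boolean TrySetAmount(String text) {
        Int32 parsed;
        Boolean success = Int32.TryParse(text, out parsed);
        if (success) m_amount = parsed;   // ordinary volatile write
        return success;
    }
}
```

Note one behavioral difference from passing the field directly: when parsing fails, this version leaves the field unchanged, whereas TryParse would have set its out argument to 0.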

Finally, volatile fields are not Common Language Specification (CLS) compliant because many languages (including Visual Basic) do not support them.
